SPHERE: An Evaluation Card for Human-AI Systems

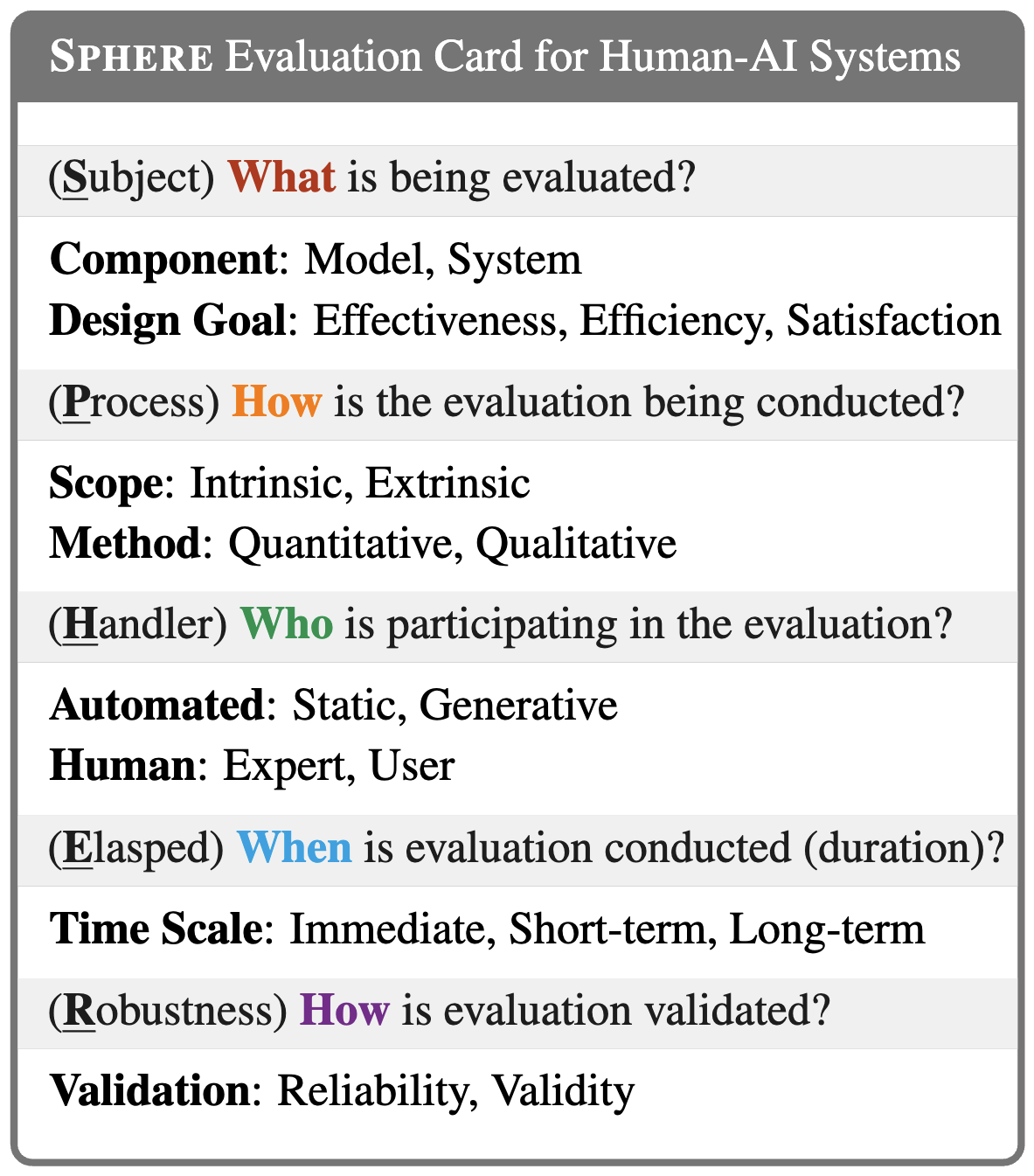

Welcome to our SPHERE evaluation card for human-AI systems! The SPHERE evaluation card consists of five dimensions (five leading questions):

- What is being evaluated?

- How is the evaluation conducted?

- Who is participating in the evaluation?

- When is evaluation conducted (duration)?

- How is evaluation validated?

Within each dimension, we define categories (i.e., the fundamental components of a dimension) and aspects (i.e., the potential options for each category). Please refer to our paper for the detailed definitions. We also provide an interface to help you create your own evaluation card using SPHERE. We invite the community to contribute by adding new papers, annotations, and discussions to track future developments in this space.
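The dimension, category, and aspect hierarchy can be pictured as a nested mapping. The sketch below is illustrative only: the category names (`component`, `design_goal`, `scope`, `method`, `evaluator`, `duration`, `type`) and the `validate_card` helper are hypothetical placeholders, not the paper's definitions or the interface's export format. The aspect values are the ones that appear in the annotated-papers table on this page.

```python
# Hypothetical layout: dimension -> category -> allowed aspects.
# Category names are placeholders chosen for illustration; aspect values
# are copied from the annotated-papers table on this page.
SCHEMA = {
    "what": {
        "component": {"model", "system"},
        "design_goal": {"effectiveness", "efficiency", "satisfaction"},
    },
    "how": {
        "scope": {"intrinsic", "extrinsic"},
        "method": {"quantitative", "qualitative"},
    },
    "who": {"evaluator": {"expert", "user"}},
    "when": {"duration": {"instant", "shortterm", "longterm"}},
    "validation": {"type": {"validity", "reliability"}},
}


def validate_card(card):
    """Return a list of problems; an empty list means the card fits SCHEMA."""
    problems = []
    for dimension, categories in card.items():
        if dimension not in SCHEMA:
            problems.append(f"unknown dimension: {dimension}")
            continue
        for category, aspects in categories.items():
            allowed = SCHEMA[dimension].get(category)
            if allowed is None:
                problems.append(f"unknown category: {dimension}.{category}")
                continue
            for aspect in aspects:
                if aspect not in allowed:
                    problems.append(
                        f"unknown aspect: {dimension}.{category}={aspect}"
                    )
    return problems


# An example card that uses only aspects listed in the table.
example_card = {
    "what": {"component": ["system"],
             "design_goal": ["effectiveness", "satisfaction"]},
    "how": {"scope": ["intrinsic", "extrinsic"],
            "method": ["quantitative", "qualitative"]},
    "who": {"evaluator": ["expert", "user"]},
    "when": {"duration": ["instant", "shortterm"]},
    "validation": {"type": ["reliability"]},
}

print(validate_card(example_card))
```

Keeping the allowed aspects in one place makes it easy to spot a card that uses a label outside the shared vocabulary, which is one way a card can support consistent documentation across papers.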

To create this evaluation card, we collaborated with researchers from a variety of disciplines, including Human-Computer Interaction (HCI), Natural Language Processing (NLP), Social Computing, ML fairness, and Education, and annotated 39 papers from the HCI and NLP fields to understand the current landscape of human-LLM systems. We hope SPHERE offers researchers and developers a practical tool to design and document human-AI system evaluation. As a design tool, SPHERE helps structure conversations around key areas of evaluation to consider. As a documentation tool, SPHERE contributes to the transparency and reproducibility of evaluation methods.

- Want to add your paper to the list? Please either (i) export using the interface above, (ii) fill out our issue template, or (iii) create a pull request in our GitHub repository.

- Have questions or found an incorrect annotation? Please email Qianou Ma or Dora Zhao.

Annotated Papers

Best used with a larger screen.

HCI | 2024 | system | effectiveness, satisfaction | intrinsic, extrinsic | quantitative, qualitative | expert, user | static | instant, shortterm | reliability | |

HCI | 2024 | system | effectiveness, satisfaction | extrinsic | quantitative, qualitative | user | shortterm | |||

HCI | 2024 | system | effectiveness, satisfaction | intrinsic, extrinsic | quantitative, qualitative | user | static | instant, shortterm, longterm | ||

HCI | 2024 | model, system | effectiveness, efficiency, satisfaction | intrinsic, extrinsic | quantitative, qualitative | expert, user | static | instant, shortterm | reliability | |

NLP | 2024 | model, system | effectiveness, satisfaction | intrinsic, extrinsic | quantitative | expert, user | static | instant, shortterm, longterm | ||

HCI | 2024 | system | effectiveness, efficiency, satisfaction | intrinsic, extrinsic | quantitative, qualitative | expert, user | shortterm | reliability | ||

HCI | 2024 | system | effectiveness, efficiency, satisfaction | intrinsic, extrinsic | quantitative, qualitative | user | static | instant, shortterm | validity, reliability | |

HCI | 2024 | system | efficiency, satisfaction | extrinsic | quantitative, qualitative | expert, user | instant, shortterm | validity | ||

NLP | 2024 | system | effectiveness | extrinsic | qualitative | |||||

HCI | 2024 | model, system | effectiveness, efficiency, satisfaction | intrinsic, extrinsic | quantitative, qualitative | user | instant, shortterm | validity |


Authors: Qianou Ma*, Dora Zhao*, Xinran Zhao, Chenglei Si, Chenyang Yang, Ryan Louie, Ehud Reiter, Diyi Yang+, Tongshuang Wu+ (*Equal contribution, +Equal contribution)

Acknowledgement: This artifact was adapted from the writing assistant evaluation card website designed by Shannon Zejiang Shen and Mina Lee.